Today I spent some time connecting two languages that are becoming popular for solving wildly different kinds of problems. I wanted to see how easy it would be, and whether it is a workable approach when you want to take advantage of the strengths of both languages. You can judge the result for yourself. What follows is my 15-minute experiment.

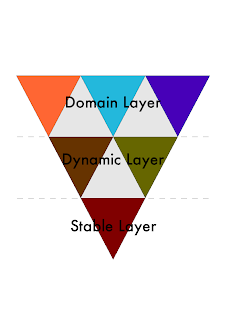

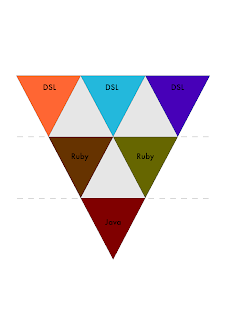

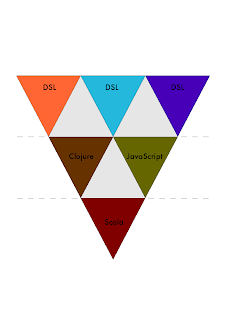

If you like, you can see this as a practical example of the sticky part of language-oriented programming: the place where two languages meet.

The languages under consideration are Ruby and Erlang. Prerequisite reading is the excellent article by my colleague Dennis Byrne, Integrating Java and Erlang.

The only part of that article that matters here is the mathserver.erl code:

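```erlang
-module(mathserver).
-export([start/0, add/2]).

%% Spawn the server loop and register it under the name mathserver.
start() ->
    Pid = spawn(fun() -> loop() end),
    register(mathserver, Pid).

%% Wait for {From, {add, First, Second}} and reply with {mathserver, Sum}.
loop() ->
    receive
        {From, {add, First, Second}} ->
            From ! {mathserver, First + Second},
            loop()
    end.

%% Client API: send a request to the registered server and wait for the reply.
add(First, Second) ->
    mathserver ! {self(), {add, First, Second}},
    receive
        {mathserver, Reply} -> Reply
    end.
```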

Follow Dennis' instructions to compile this code and start the server in an Erlang console, then leave it running.
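If the message protocol is unfamiliar, here is a rough in-process analogy in plain Ruby, with no Erlang or JInterface involved. It is only a sketch: a thread with a Queue plays the registered mathserver process, and `MathServer` is a name made up for this illustration. Unlike an Erlang mailbox, a Queue has no selective receive, so this toy drops requests it doesn't understand instead of leaving them queued.

```ruby
# Toy analogy of mathserver.erl: a thread plays the server process and a
# Queue plays its mailbox. Not Erlang semantics, just the protocol's shape.
class MathServer
  def initialize
    @mailbox = Queue.new
    @worker = Thread.new { serve }
  end

  # Like add/2: send {From, {add, First, Second}}, then block for the reply.
  def add(first, second)
    reply_to = Queue.new
    @mailbox << [reply_to, [:add, first, second]]
    tag, result = reply_to.pop
    raise "unexpected reply tag #{tag.inspect}" unless tag == :mathserver
    result
  end

  private

  # Like loop/0: take one request, reply with {mathserver, Sum}, repeat.
  # Requests that are not :add are discarded (Erlang would leave them queued).
  def serve
    loop do
      from, (op, first, second) = @mailbox.pop
      from << [:mathserver, first + second] if op == :add
    end
  end
end

server = MathServer.new
puts server.add(42, 1) # prints 43
```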

Using this service from Erlang is really easy: you just call mathserver:add/2, locally or remotely. Doing it from another language, in this case Ruby, is a bit more complicated. I will use JRuby to solve the problem, since it lets Ruby code use JInterface, the Java library that ships with Erlang.

The client file for calling this code looks like this:

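```ruby
require 'erlang'

# "cookie" must match the cookie the Erlang server node was started with
Erlang.client("clientnode", "cookie") do |client_node|
  server_node = Erlang::OtpPeer.new("servernode@127.0.0.1")
  connection = client_node.connect(server_node)

  # Asks the server node to run mathserver:add(42, 1) and send back the result
  connection.sendRPC("mathserver", "add", Erlang.list(Erlang.num(42), Erlang.num(1)))
  sum = connection.receiveRPC
  p sum.int_value

  connection.close
end
```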

OK, I confess: there is no erlang.rb yet, so I wrote one. It adds a few small helpers that make interfacing with Erlang a bit easier, but what's going on is still quite straightforward. We create a named node with a specific cookie, connect to the server node, and then use sendRPC and receiveRPC to perform the actual call. The erlang.rb file looks something like this (I did only the minimum):

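```ruby
require 'java'
# Adjust this path to wherever JInterface lives in your Erlang installation
require '/opt/local/lib/erlang/lib/jinterface/priv/OtpErlang.jar'

module Erlang
  import com.ericsson.otp.erlang.OtpSelf
  import com.ericsson.otp.erlang.OtpPeer
  import com.ericsson.otp.erlang.OtpErlangLong
  import com.ericsson.otp.erlang.OtpErlangObject
  import com.ericsson.otp.erlang.OtpErlangList
  import com.ericsson.otp.erlang.OtpErlangTuple

  class << self
    # Build an Erlang tuple from already-converted terms
    def tuple(*args)
      OtpErlangTuple.new(args.to_java(OtpErlangObject))
    end

    # Build an Erlang list from already-converted terms
    def list(*args)
      OtpErlangList.new(args.to_java(OtpErlangObject))
    end

    # Create a local node with the given name and cookie and hand it to the block
    def client(name, cookie)
      yield OtpSelf.new(name, cookie)
    end

    # Wrap a Ruby integer as an Erlang integer
    def num(value)
      OtpErlangLong.new(value)
    end

    # Register the node with epmd, then yield every accepted connection
    def server(name, cookie)
      server = OtpSelf.new(name, cookie)
      server.publish_port
      loop do
        yield server, server.accept
      end
    end
  end
end
```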

As you can see, this is ordinary code for interfacing with a Java library. Note that you need to find where JInterface is located in your Erlang installation and point the require at it. (If you're on Mac OS X, the JInterface that comes with MacPorts doesn't work; download and build a new one instead.)
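Rather than hardcoding the MacPorts path, erlang.rb could resolve the jar at load time. Evaluating `code:lib_dir(jinterface).` in an Erlang shell prints JInterface's directory, and the jar sits in its `priv` subdirectory, as in the path above. The helper below is a hypothetical sketch; in particular, the `JINTERFACE_DIR` environment variable is a name I made up, not something Erlang or JRuby defines.

```ruby
# Hypothetical helper for erlang.rb: resolve OtpErlang.jar from a JInterface
# directory, e.g. the one printed by code:lib_dir(jinterface) in an Erlang shell.
# JINTERFACE_DIR is an invented environment variable, not an Erlang convention.
def otp_erlang_jar(lib_dir = ENV["JINTERFACE_DIR"])
  if lib_dir.nil? || lib_dir.empty?
    raise ArgumentError, "set JINTERFACE_DIR to the output of code:lib_dir(jinterface)"
  end

  jar = File.join(lib_dir, "priv", "OtpErlang.jar")
  raise LoadError, "OtpErlang.jar not found at #{jar}" unless File.file?(jar)
  jar
end
```

In erlang.rb, `require otp_erlang_jar` would then replace the hardcoded require; JRuby can load a jar by path, just as the original line does.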

There are many things I could have done to make the API MUCH easier to use. For example, I might add some methods to OtpErlangPid, so you could do something like:

```ruby
pid << [:call, :mathserver, :add, [1, 2]]
```

where `<<` sends the message after transforming the Ruby arguments into Erlang terms.
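Here is a rough sketch of the transformation such a `<<` would perform, in plain Ruby so it runs anywhere. It renders Erlang term syntax as a string instead of building JInterface objects; a JRuby version would build OtpErlangAtom, OtpErlangLong, OtpErlangTuple and OtpErlangList in the same recursion. The rule that the outermost Array becomes a tuple and nested Arrays become lists is my assumption, chosen to fit the example above, and `FakePid` is a hypothetical stand-in for OtpErlangPid.

```ruby
# Assumed convention: the outermost Array becomes a tuple, nested Arrays
# become lists, Symbols become atoms and Integers stay integers.
def to_erlang(term, top_level: true)
  case term
  when Symbol
    name = term.to_s
    # Plain lowercase atoms print bare; anything else is single-quoted
    # (embedded quotes are not escaped in this sketch).
    name.match?(/\A[a-z][A-Za-z0-9_@]*\z/) ? name : "'#{name}'"
  when Integer
    term.to_s
  when Array
    inner = term.map { |t| to_erlang(t, top_level: false) }.join(",")
    top_level ? "{#{inner}}" : "[#{inner}]"
  else
    raise ArgumentError, "no Erlang mapping for #{term.inspect}"
  end
end

# Hypothetical stand-in for OtpErlangPid: records messages instead of sending them.
class FakePid
  attr_reader :sent

  def initialize
    @sent = []
  end

  def <<(message)
    @sent << to_erlang(message)
    self
  end
end

pid = FakePid.new
pid << [:call, :mathserver, :add, [1, 2]]
p pid.sent # prints ["{call,mathserver,add,[1,2]}"]
```

This convention cannot express a tuple nested inside a list; a fuller design would need an explicit marker for that case.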

In fact, it would be very simple to make the JInterface API downright pleasant to use, getting the goodies of Erlang while staying in Ruby. And yes, this could work on MRI too: there is an equivalent C library for talking to Erlang (erl_interface), so you could either write a native extension or just wire it up with DL.

If you read erlang.rb carefully, you may have noticed several methods that aren't used yet. Why are they there?

Well, it just so happens that we don't need any Erlang code in this example at all. We could simply use the Erlang runtime system as one big message bus (with fault tolerance, error handling and all that jazz, of course). That means we can write the server in Ruby too:

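```ruby
require 'erlang'

Erlang.server("servernode", "cookie") do |server, connection|
  # sendRPC delivers {Pid, {call, Module, Function, Args, user}}
  terms = connection.receive
  from = terms.element_at(0)
  arguments = terms.element_at(1).element_at(3)

  first = arguments.element_at(0)
  second = arguments.element_at(1)
  sum = first.long_value + second.long_value

  # Reply to the calling pid; receiveRPC returns the second element of this 2-tuple
  connection.send(from, Erlang.tuple(server.pid, Erlang.num(sum)))
  connection.close
end
```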

The server method accepts connections in a loop and invokes the block once for every accepted connection, yielding the connection together with the node object that represents the server. The term access looks convoluted because sendRPC wraps the arguments in an rpc envelope, most of which we can ignore in this case. But if we wanted to, we could check the atoms in that envelope and perform different operations based on them.
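The round trip, including dispatching on the atoms, can be modelled in plain Ruby without JInterface. Based on my reading of JInterface's OtpConnection, sendRPC sends `{Pid, {call, Module, Function, Args, user}}` to the registered process `rex`, and receiveRPC just returns the second element of the 2-tuple that comes back, which is why the Ruby server can reply with `{ServerPid, Sum}`. In this sketch Arrays stand in for both tuples and lists; the handler table, the `:sub` operation and all method names are hypothetical.

```ruby
# Plain-Ruby model of the rpc round trip (no JInterface needed). Arrays stand
# in for Erlang tuples and lists, Symbols for atoms.

# Roughly what sendRPC("mathserver", "add", args) sends to `rex` on the server node.
def rpc_request(from, mod, fun, args)
  [from, [:call, mod.to_sym, fun.to_sym, args, :user]]
end

# Hypothetical handler table keyed on the [Module, Function] atoms.
HANDLERS = {
  [:mathserver, :add] => ->(a, b) { a + b },
  [:mathserver, :sub] => ->(a, b) { a - b } # hypothetical, not in mathserver.erl
}.freeze

# Server side: args sits at the same position the Ruby server reads with
# terms.element_at(1).element_at(3). Returns the destination pid and a 2-tuple reply.
def dispatch(message, server_pid)
  from, (tag, mod, fun, args, _user) = message
  raise ArgumentError, "not an rpc call: #{tag.inspect}" unless tag == :call

  handler = HANDLERS.fetch([mod, fun]) do
    raise ArgumentError, "no handler for #{mod}:#{fun}"
  end
  [from, [server_pid, handler.call(*args)]]
end

# Client side: like receiveRPC, return the second element of a 2-tuple.
def rpc_reply_value(reply)
  reply.is_a?(Array) && reply.size == 2 ? reply[1] : nil
end

request = rpc_request(:client_pid, "mathserver", "add", [42, 1])
to, reply = dispatch(request, :server_pid)
p to                     # prints :client_pid
p rpc_reply_value(reply) # prints 43
```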

You can run the code above as the server and use exactly the same client code. For ease of testing, change the server node name to servernode2 in both the server and the client, then run them. You have just sent Erlang messages from Ruby to Ruby, passing through Java on the way.

Getting different languages to work together doesn't need to be hard at all. In fact, it can be downright easy to switch to another language for the few operations that don't suit the current language very well. Try it out. You might be surprised.

By Ola Bini | Tags: erlang, java, jruby, polyglot, programming languages, ruby